What Are the Best AI Chip Solutions for Enterprises in 2025

NVIDIA, AMD, Groq, and Intel lead the market with advanced AI chip solutions for enterprises in 2025. NVIDIA’s B300 and H200 chips power large models and deliver top performance in multi-chip clusters. AMD’s MI300 series rivals NVIDIA in language model benchmarks, while Groq focuses on record-breaking inference speed. Each provider offers distinct strengths in software support and scalability, so enterprises should match their AI chip choice to their goals for performance and growth.

Key Takeaways

The AI chip market is growing fast as more companies use AI for tasks like language models, healthcare, and smart devices.

Top providers like NVIDIA, AMD, Intel, and specialized startups offer different chips that fit various business needs and workloads.

Enterprises should choose AI chips based on performance, scalability, cost, and strong software support to get the best results.

AI chips help businesses speed up data analysis, improve customer support, and automate tasks across many industries.

Matching AI chip choices with company goals and IT systems ensures smooth adoption and long-term success.

Market Overview

Growth Trends

The enterprise AI chip market is expanding rapidly in 2025. Deloitte projects that the global market for enterprise edge AI chips will reach tens of billions of dollars. This growth reflects the increasing importance of on-premises AI infrastructure, from enterprise data centers to edge sites. Several factors drive this surge:

Generative AI and Advanced Machine Learning: Organizations invest heavily in hardware to support large language models and generative AI, increasing demand for GPUs and specialized chips.

Industry-Wide AI Adoption: Sectors like healthcare, finance, manufacturing, and retail use AI for tasks such as diagnostic imaging and fraud detection, which boosts chip demand.

Edge AI and IoT Expansion: The rise of IoT devices and real-time processing needs in smart cities and factories fuels demand for edge AI chips.

High-Performance Computing: Enterprises and research institutions deploy AI accelerators in data centers for specialized workloads and innovation.

Recent funding rounds, such as Groq’s $640 million raise, show strong confidence in ongoing innovation. Enterprises are shifting from traditional CPUs to specialized chips like GPUs, NPUs, and custom accelerators, and over 75% of AI models are expected to run on such chips by 2026. Companies also invest in custom silicon, such as Google’s TPU and Amazon’s Trainium, to optimize performance and control costs. Energy efficiency and supply chain resilience have become top priorities, with enterprises exploring open-source architectures and new cooling technologies.

Enterprise Adoption

Enterprises face both opportunities and challenges when adopting AI chip solutions. The main barrier is the difficulty of estimating and demonstrating the value of AI projects. Other obstacles include talent shortages, technical difficulties, and data quality or security issues. Many organizations struggle to move AI projects from prototype to production, with less than half reaching full deployment. Only a small percentage of enterprises are considered AI-mature, and that maturity correlates with better scaling and deployment.

Enterprises prefer flexible deployment options that balance performance, security, and scalability. They often choose open-source AI models and seek strong vendor support throughout the AI adoption lifecycle.

Technical barriers such as power and cooling constraints in data centers remain, but innovations like direct-to-chip liquid cooling are helping. Enterprises also manage supply chain risks by diversifying suppliers and investing in strategic inventory. Organizations that align their AI chip strategies with business goals and operational needs are best positioned to succeed in 2025.

Leading AI Chip Solution Providers

Market Leaders

The enterprise AI chip market in 2025 features several companies with strong positions. These companies lead in technology, partnerships, and market share. The table below shows the top providers and their roles:

| Company | Market Role in 2025 |
|---|---|
| NVIDIA | Clear leader in AI chip development; strong enterprise collaborations (e.g., Microsoft); extensive deployment of Blackwell GPUs in Azure data centers; leading AI GPU architecture and software innovation. |
| AMD | Formidable challenger with competitive AI CPUs and GPUs; growing presence in AI PCs, data centers, and autonomous vehicles; notable performance improvements in AI training GPUs; expanding enterprise adoption. |
| Samsung | Focus on AI chips for mobile and edge computing devices; mass production of advanced thin AI chips that improve thermal efficiency; key player in mobile AI and edge applications that enhance user experience. |
| Intel | AI accelerators and processors for data centers, edge, and commercial PCs; strategic partnerships (e.g., Microsoft); product launches such as Gaudi 3 and Core Ultra processors with integrated AI; committed to regaining market share. |
| TSMC | Largest contract semiconductor manufacturer; critical enabler of advanced AI chip production for all major designers; leader in advanced fabrication technologies (3nm, 2nm); a fundamental pillar of AI chip industry growth. |

NVIDIA stands out with its Blackwell GPU architecture and a mature software ecosystem. The company works closely with cloud providers and enterprises. AMD continues to grow with its MI300 series, which offers high memory capacity and strong performance for AI workloads. Intel focuses on affordability and supply stability, providing cost-effective alternatives like the Gaudi AI chips. Samsung leads in mobile and edge AI chips, making devices smarter and more efficient. TSMC supports the entire industry by manufacturing advanced chips for many providers.

Market leaders offer robust AI chip solutions that support large-scale AI training, inference, and integration with enterprise systems.

Challengers

Several companies challenge the market leaders by offering innovative products and targeting specific enterprise needs. These challengers include AWS, Huawei, and Broadcom. Each brings unique strengths to the table.

AWS (Amazon Web Services): AWS develops custom chips like Trainium and Inferentia. These chips power AI training and inference in the cloud. They help enterprises reduce costs and improve performance for large language models and recommendation systems.

Huawei: Huawei’s Ascend AI chips, built on its Da Vinci architecture, target high-performance computing and edge AI. The company develops Ascend-based solutions for both data centers and smart devices.

Broadcom: Broadcom creates custom AI ASICs for hyperscale data centers. These chips handle specific workloads, such as transformers and recommender systems, with high energy efficiency.

Intel also appears as a challenger, especially with its focus on domestic manufacturing and supply chain security. The company invests heavily in new plants and aims to regain market share with affordable, reliable chips.

Challengers often provide alternatives that balance performance, cost, and energy efficiency, giving enterprises more choices for their AI chip needs.

Specialized Players

Specialized players design chips for unique AI workloads and emerging applications. These companies include startups and established vendors who focus on innovation and niche markets.

| Provider Type | Key Providers | Differentiators and Offerings |
|---|---|---|
| Specialized chip designers | Cerebras, Groq, Graphcore, SambaNova, Untether AI | Use new architectures such as wafer-scale chips, spatial AI accelerators, and processing-in-memory. These designs help enterprises scale up AI workloads and improve efficiency. |
| Hyperscalers' custom ASICs | Microsoft (Maia), Meta (MTIA), Amazon (Trainium) | Build custom chips for their own cloud and AI services. These chips optimize performance for large language models, recommendation engines, and scalable AI workloads. |
| Emerging chip types | NPUs (neural processing units) | Accelerate smaller AI tasks with low power use. Enterprises use them for local AI, embedded systems, and sensitive data processing. |

Specialized providers help enterprises run AI workloads that need more than what general-purpose chips can offer. For example, Groq focuses on record-breaking inference speed, while SambaNova uses dataflow architectures for efficient AI processing. Microsoft, Meta, and Amazon design custom chips for their own platforms, making their cloud services faster and more cost-effective.

Common enterprise workloads that benefit from specialized chips include:

Natural language processing

Computer vision

Recommendation systems

Real-time inference for chatbots and autonomous systems

Specialized AI chip providers let enterprises match hardware to specific workloads, improving speed, efficiency, and scalability.

Key Features of AI Chip Solutions

Performance

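Enterprises evaluate AI chip deployments on two levels: the quality of the models they run and how quickly and efficiently the hardware runs them. The table below lists common model-quality metrics. Hardware choices should not degrade these scores, for example when a chip runs a model in a lower-precision number format.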

| Metric Category | Model Type | Key Metrics | What It Measures | Use Cases |
|---|---|---|---|---|
| Direct Metrics | Classification | Precision, Recall, F1 Score, False Positive Rate | Accuracy of predicted class labels vs. actual labels | Spam detection, fraud detection, medical diagnosis |
| Direct Metrics | Regression | RMSE, MSE, MAE | Deviation between predicted and actual continuous values | Price prediction, demand forecasting, energy consumption |
| Direct Metrics | General classification | Model Accuracy, Model Loss, AUC-ROC, Confusion Matrix | Overall model performance and prediction confidence | General-purpose evaluation for binary/multiclass models |
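The classification and regression metrics in the table are simple to compute once predictions are available. The Python sketch below derives precision, recall, F1, and false positive rate from confusion-matrix counts, and MSE, RMSE, and MAE from paired values. The function names and the fraud-detection counts are hypothetical, chosen only for illustration; libraries such as scikit-learn provide equivalent functions (`precision_score`, `mean_squared_error`, and others).

```python
import math

def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Precision, recall, F1 and false positive rate from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0  # share of true negatives flagged as positive
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}

def regression_metrics(y_true: list[float], y_pred: list[float]) -> dict[str, float]:
    """MSE, RMSE and MAE between actual and predicted continuous values."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)
    mae = sum(abs(e) for e in errors) / len(errors)
    return {"mse": mse, "rmse": math.sqrt(mse), "mae": mae}

# Hypothetical fraud-detection results, for illustration only.
print(classification_metrics(tp=80, fp=20, fn=10, tn=890))
# Hypothetical price predictions vs. actual prices.
print(regression_metrics([100.0, 150.0, 200.0], [110.0, 140.0, 200.0]))
```

Running the same evaluation before and after moving a model to new hardware is one way to confirm that a faster chip has not changed model quality.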

On the hardware side, the key measures are throughput, response time (latency), and energy efficiency. Enterprises need chips that deliver fast results and handle large workloads without delays, which matters for tasks like fraud detection, medical diagnosis, and real-time analytics.

Scalability

Scalability allows AI chip solutions to grow with enterprise needs. Leading chips use advanced packaging and interconnect technologies, including chiplet integration, 3D stacking, and through-silicon vias (TSVs), which boost bandwidth and lower latency. Companies like Broadcom and Tenstorrent design chips for high-speed data transfer and distributed AI workloads, and Oracle Cloud Infrastructure uses massive GPU clusters with ultrafast networking to support large-scale AI training. These features help enterprises run bigger models and process more data as their needs increase.
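Interconnect bandwidth matters because multi-chip training repeatedly synchronizes gradients across devices. The sketch below uses the standard alpha-beta cost model for a ring all-reduce: each of the 2(N-1) steps pays a fixed per-step latency and moves 1/N of the message over one link. The model size, link speeds, and latency are hypothetical values chosen for illustration, and real systems add overheads this estimate ignores.

```python
def ring_allreduce_seconds(
    num_gpus: int,
    message_bytes: float,
    link_bytes_per_s: float,
    per_step_latency_s: float,
) -> float:
    """Alpha-beta estimate of one ring all-reduce.

    There are 2 * (N - 1) steps (reduce-scatter, then all-gather); each step
    pays a fixed latency and sends message_bytes / N bytes over one link.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single device
    steps = 2 * (num_gpus - 1)
    bandwidth_term = steps / num_gpus * message_bytes / link_bytes_per_s
    latency_term = steps * per_step_latency_s
    return bandwidth_term + latency_term

# Hypothetical: 7B-parameter model, FP16 gradients (2 bytes each), 8 GPUs,
# 10 microseconds of fixed latency per step.
grad_bytes = 7e9 * 2
for label, bytes_per_s in [("100 GB/s link", 100e9), ("400 GB/s link", 400e9)]:
    t = ring_allreduce_seconds(8, grad_bytes, bytes_per_s, per_step_latency_s=10e-6)
    print(f"{label}: {t:.3f} s per gradient sync")
```

With these assumed numbers, quadrupling link bandwidth cuts each synchronization from about 0.245 s to about 0.061 s, which is why faster interconnects let clusters keep more of their added compute busy.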

Enterprises often face challenges such as high power demands, cooling needs, and operational complexity when scaling AI workloads. Choosing scalable hardware and infrastructure helps reduce these barriers.

Ecosystem Support

A strong ecosystem supports both hardware and software needs. Top providers offer robust software frameworks, developer tools, and cloud services. For example, NVIDIA’s ecosystem includes CUDA, NeMo, and TensorRT for AI development, and the NVIDIA Deep Learning Institute provides training and certifications. Hardware integrates with enterprise IT systems, such as HPE servers and GigaIO’s vendor-agnostic platforms. These solutions support multi-tenancy, secure management, and easy deployment.

Security and compliance features play a key role. Many chips include cryptographic verification, secure logging, and usage controls. These features protect data and help enterprises meet regulatory requirements. Integration with existing IT infrastructure ensures smooth deployment and management. Companies like Microsoft and Apple design custom chips for their platforms, making it easier for enterprises to adopt AI at scale.
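Cryptographic verification often starts with a simple idea: refuse to load firmware or model files whose digest does not match a trusted value. The sketch below shows only that integrity check using Python's standard `hashlib` and `hmac` modules. It is a simplified illustration, not any vendor's mechanism; production secure boot typically verifies a digital signature with a public key rather than comparing against a stored hash. The firmware bytes and function names are hypothetical.

```python
import hashlib
import hmac

def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()

def image_matches_digest(image: bytes, expected_hex: str) -> bool:
    """Return True if the image's SHA-256 digest equals the expected digest.

    hmac.compare_digest compares in constant time, so the check does not
    leak how many leading characters matched.
    """
    return hmac.compare_digest(sha256_hex(image), expected_hex.lower())

firmware = b"hypothetical accelerator firmware v1.2"
# In practice the trusted digest would come from the vendor over a trusted channel.
trusted_digest = sha256_hex(firmware)

print(image_matches_digest(firmware, trusted_digest))                # True
print(image_matches_digest(firmware + b"tampered", trusted_digest))  # False
```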

Enterprise Use Cases

Data Analytics

Enterprises use AI chips to speed up data analytics tasks. These chips include GPUs, ASICs, FPGAs, and NPUs, and they help companies process large amounts of data quickly. For example, NVIDIA GPUs handle tasks like training language models and genome sequencing. Specialized chips, such as Intel’s Gaudi 3 and Graphcore’s IPU-POD, offer better price-performance and energy efficiency for specific jobs. IBM uses Gaudi 3 for fast AI inferencing, while insurance companies use Graphcore’s system to analyze claims five times faster. Cerebras Systems’ wafer-scale engine powers supercomputers for advanced AI models. These AI chips let enterprises analyze data faster, save energy, and lower costs.

AI Applications

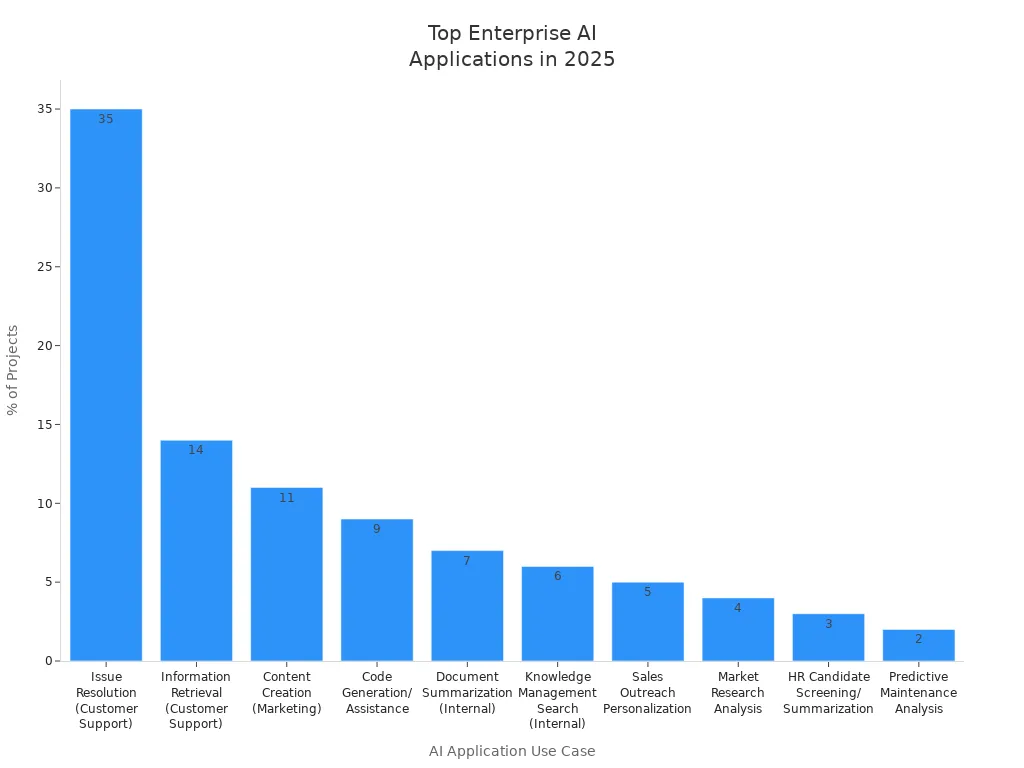

AI chips support many important business applications. The most common include customer support, marketing, software development, and knowledge management, where companies use AI chips to run chatbots, create content, and assist with code generation. The table below shows the top AI application use cases in 2025 by share of projects:

| Rank | AI Application Use Case | % of Projects | Key Departments | Example Tools/Companies |
|---|---|---|---|---|
| 1 | Issue Resolution (Customer Support) | 35% | Customer Support | Klarna AI Agent, AI Chatbots |
| 2 | Information Retrieval | 14% | Customer Support | Kuka Xpert, Knowledge Base AI |
| 3 | Content Creation (Marketing) | 11% | Marketing | Generative AI Writing Tools |
| 4 | Code Generation/Assistance | 9% | R&D, IT | GitHub Copilot, Amazon Q Dev |
| 5 | Document Summarization | 7% | Various | LLM Summarization Tools |

AI chips from NVIDIA, AMD, and cloud providers like AWS and Google power these applications, helping companies train and run large language models and generative AI tools.

Industry Examples

Many industries use AI chips to solve real problems. In manufacturing, FIH Mobile uses Google’s Visual Inspection AI to automate quality checks. Nearly 100 NVIDIA-powered AI factories are active, with companies like AT&T, BYD, and Capital One leading the way. Yum! Brands uses NVIDIA AI in restaurants to improve service, and Dell supplies AI-optimized servers for enterprise needs. The table below shows popular AI chip solutions in key industries:

| Manufacturer | AI Chip/Product | Industry Applications |
|---|---|---|
| NVIDIA | Grace CPU, HGX A100 platform | Healthcare, Manufacturing, Finance |
| Intel | 11th Gen Core, Ponte Vecchio | Finance, Healthcare, Manufacturing |
| AMD (incl. Xilinx) | EPYC, Versal AI Core series | Healthcare, Manufacturing, Finance |
| Qualcomm | Cloud AI 100 | Finance, Edge AI |
| NXP Semiconductors | AI embedded chips | Manufacturing |

AI chips help companies in finance, healthcare, and manufacturing process data, automate tasks, and improve decision-making.

Comparing AI Chip Solutions

Performance Metrics

Enterprises compare AI chip solutions using hardware performance metrics: throughput, latency, and energy efficiency. Throughput measures how many tasks a chip completes per second. Latency measures how quickly a chip responds to a single request. Energy efficiency measures how much energy the chip uses per task, often reported as performance per watt. Many companies use benchmarks like MLPerf to test chips on real-world AI workloads. Some chips excel at training large language models, while others perform better at running many small tasks at once. Enterprises should match the chip’s strengths to their main business needs.
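The three metrics can be derived from a simple benchmark log. The sketch below assumes you have recorded each request's latency, the total wall-clock time of the run, and the average accelerator power draw (however it is measured); it is an illustration of the arithmetic, not the MLPerf methodology, which has its own scenarios and rules. The nearest-rank percentile is used for the 50th and 99th percentile latencies, and all sample numbers are hypothetical.

```python
import math

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize_run(latencies_s: list[float], wall_time_s: float, avg_power_w: float) -> dict[str, float]:
    """Throughput, latency and energy metrics for one benchmark run.

    latencies_s: per-request latency in seconds (requests may overlap in time)
    wall_time_s: elapsed time for the whole run
    avg_power_w: average accelerator power draw during the run, in watts
    """
    if not latencies_s or wall_time_s <= 0 or avg_power_w <= 0:
        raise ValueError("need requests, a positive wall time and positive power")
    n = len(latencies_s)
    energy_j = avg_power_w * wall_time_s  # joules = watts x seconds
    return {
        "throughput_rps": n / wall_time_s,
        "mean_latency_ms": 1000 * sum(latencies_s) / n,
        "p50_latency_ms": 1000 * percentile(latencies_s, 50),
        "p99_latency_ms": 1000 * percentile(latencies_s, 99),
        "joules_per_request": energy_j / n,
        # requests per joule is the same as (requests per second) per watt
        "requests_per_joule": n / energy_j,
    }

# Hypothetical run: 10 overlapping requests, one slow outlier, 400 W average draw.
latencies = [0.010] * 9 + [0.050]
for name, value in summarize_run(latencies, wall_time_s=0.05, avg_power_w=400.0).items():
    print(f"{name}: {value:.2f}")
```

In this made-up run the chip serves 200 requests per second at 2 J per request, and the single outlier shows up in the 99th-percentile latency (50 ms) while barely moving the median (10 ms). That gap is why latency-sensitive services usually track tail latency and not just the average.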

Cost Considerations

Cost plays a big role in choosing an AI chip solution. Enterprises look at both upfront and ongoing expenses. Here are some key points:

Cloud AI solutions use pay-as-you-go pricing. This reduces the need for large upfront investments and lets companies scale as needed. It also lowers the need for dedicated IT staff.

On-premises solutions give full control and customization. They may cost less over time but require higher initial spending and ongoing maintenance.

ASICs, such as Google’s TPU or AWS Trainium, are often cited as having 30–40% lower total cost of ownership than GPUs for the workloads they are designed for. They use less energy and work well for specific AI tasks.

GPUs offer flexibility but can cost more to run at scale due to higher power use.

Operational expenses include software, support contracts, and cloud usage. These can add up and sometimes exceed the cost of the hardware itself.

Financing options, such as leasing or cloud credits, help companies manage cash flow and support growth.

Enterprises should weigh both short-term and long-term costs when comparing solutions.
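A basic way to weigh short-term against long-term cost is to compare cumulative spend over time and find the break-even point. The sketch below compares a pay-as-you-go cloud option with an on-premises option that has a large upfront cost and lower monthly running costs. All dollar figures are hypothetical, and the model deliberately ignores depreciation, discounting, financing, and hardware refresh cycles.

```python
import math
from typing import Optional

def cumulative_cost(months: int, monthly: float, upfront: float = 0.0) -> float:
    """Total spend after a number of months: one-time upfront cost plus recurring cost."""
    return upfront + monthly * months

def break_even_month(cloud_monthly: float, onprem_upfront: float, onprem_monthly: float) -> Optional[int]:
    """First whole month in which cumulative on-premises cost <= cumulative cloud cost.

    Returns None when on-premises running costs are not lower than cloud costs,
    because the upfront investment is then never recovered.
    """
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return None
    return max(1, math.ceil(onprem_upfront / monthly_savings))

# Hypothetical figures for one AI cluster, for illustration only.
CLOUD_MONTHLY = 60_000       # pay-as-you-go instances
ONPREM_UPFRONT = 1_200_000   # servers, accelerators, installation
ONPREM_MONTHLY = 20_000      # power, cooling, support contracts, staff time

for months in (12, 36, 60):
    cloud = cumulative_cost(months, CLOUD_MONTHLY)
    onprem = cumulative_cost(months, ONPREM_MONTHLY, ONPREM_UPFRONT)
    print(f"{months:>2} months: cloud ${cloud:,.0f} vs on-prem ${onprem:,.0f}")
print("break-even month:", break_even_month(CLOUD_MONTHLY, ONPREM_UPFRONT, ONPREM_MONTHLY))
```

With these made-up numbers, the cloud option is cheaper for the first two years and on-premises pulls ahead at month 30. Changing the utilization or power assumptions can move that point considerably, so it is worth rerunning with your own figures.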

Support and Services

Long-term support and upgrade paths matter for enterprise buyers. Intel updates its chips every two years and works with partners to keep tools ready for new designs, which helps companies stay current and innovate. Penguin Solutions bundles hardware, software, and services to reduce risk and make upgrades easier, and says its software can cut latency by up to 50%. Strategic partnerships, like those with SK Telecom, help create flexible data center ecosystems and reduce the risk of technology becoming outdated. Companies also benefit from support contracts, which add value and ensure smooth operation.

Enterprises can choose from leading providers like NVIDIA, AMD, and Intel or explore innovative options from emerging companies. Each AI chip solution offers unique strengths for different workloads and business goals. Decision-makers should evaluate performance, scalability, and ecosystem support. Aligning chip choices with business strategy, data quality, and workforce readiness helps drive long-term value. As AI evolves, companies benefit by considering both established leaders and new innovators to meet future needs.

FAQ

What is the main benefit of using AI chips in enterprises?

AI chips help companies process data faster and more efficiently. They support tasks like language translation, image recognition, and customer service. These chips save time and reduce costs for businesses.

How do enterprises choose the right AI chip solution?

Companies look at performance, cost, and support. They compare how fast chips run tasks, how much energy they use, and if they fit with current systems. Good vendor support also matters.
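One common way to combine these criteria is a weighted scoring matrix: score each candidate on each criterion, weight the criteria by importance, and rank by the weighted average. The sketch below applies that technique. The criteria names mirror the answer above, but the weights, the 1 to 5 scores, and the "Option A/B" candidates are hypothetical and do not describe real vendors.

```python
def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-criterion scores (e.g. 1-5); weights need not sum to 1."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[c] * w for c, w in weights.items()) / total_weight

# Hypothetical weights reflecting one organization's priorities.
WEIGHTS = {"performance": 0.35, "cost": 0.25, "energy": 0.15, "compatibility": 0.15, "support": 0.10}

# Hypothetical 1-5 scores from an internal evaluation; not real vendor ratings.
CANDIDATES = {
    "Option A": {"performance": 5, "cost": 2, "energy": 3, "compatibility": 5, "support": 5},
    "Option B": {"performance": 4, "cost": 4, "energy": 4, "compatibility": 3, "support": 3},
}

ranked = sorted(CANDIDATES, key=lambda name: weighted_score(CANDIDATES[name], WEIGHTS), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(CANDIDATES[name], WEIGHTS):.2f}")
```

The ranking depends heavily on the weights, so it helps to agree on them with stakeholders before scoring any candidates.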

Are AI chips secure for business use?

Most AI chips include security features that protect data and help companies meet regulatory requirements. Some chips offer encryption and secure boot options. Enterprises should check for these features before buying.

Can AI chips work with existing IT systems?

Many AI chips work with popular servers and cloud platforms. Companies can add them to current setups. Some chips need special software or hardware, so checking compatibility is important.
